The central component of TAAE 2 is the buffer stack, a utility that manages a pool of AudioBufferList structures, which in turn store audio for the current render cycle.

The buffer stack is a production line. At the beginning of each render cycle, the buffer stack starts empty; at the end of the render cycle, the buffer stack is reset to this empty state. In between, your code will manipulate the stack to produce, manipulate, analyse, record and ultimately output audio.

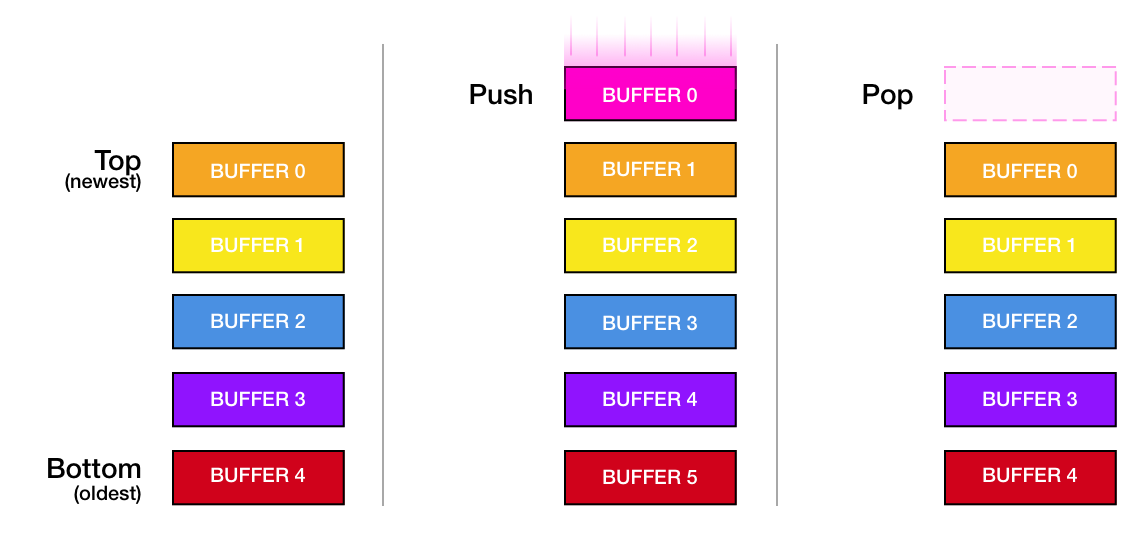

Think of the stack as a stacked collection of buffers, one on top of the other, with the oldest at the bottom, and the newest at the top. You can push buffers on top of the stack, and pop them off, and you can inspect any buffer within the stack:

Operations

Push buffers onto the stack to generate new audio. Get existing buffers from the stack and edit them to apply effects, analyse, or record audio. Mix buffers on the stack to combine multiple audio sources. Output buffers to the current output to play their audio out loud. Pop buffers off the stack when you're done with them.

Each buffer on the stack can be mono, stereo, or multi-channel audio, and every buffer has the same number of frames of audio: that is, the number of frames requested by the output for the current render cycle.
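To make the push, get and pop operations concrete, here is a minimal sketch of a toy buffer stack in C. It is not the TAAE 2 implementation: `ToyStack`, `toy_push`, `toy_get`, `toy_pop` and the constants are hypothetical names invented for this illustration, buffers are mono only, and treating index 0 as the top of the stack is a convention of the sketch.

```c
#include <assert.h>
#include <string.h>

#define TOY_MAX_BUFFERS 8   /* capacity of the toy pool (assumed value) */
#define TOY_FRAMES      4   /* frames per render cycle (tiny, for illustration) */

/* Hypothetical stand-in for a buffer stack; not the TAAE 2 implementation. */
typedef struct {
    float samples[TOY_MAX_BUFFERS][TOY_FRAMES]; /* preallocated pool, mono only */
    int   depth;                                /* buffers currently on the stack */
} ToyStack;

/* Reset to the empty state, as at the start and end of a render cycle. */
static void toy_reset(ToyStack *s) {
    s->depth = 0;
}

/* Push one zeroed buffer and return it, or NULL if the pool is exhausted. */
static float *toy_push(ToyStack *s) {
    if (s->depth == TOY_MAX_BUFFERS) return NULL;
    float *buf = s->samples[s->depth++];
    memset(buf, 0, sizeof(float) * TOY_FRAMES);
    return buf;
}

/* Get a buffer by index, where 0 is the top (newest); NULL if out of range. */
static float *toy_get(ToyStack *s, int index) {
    if (index < 0 || index >= s->depth) return NULL;
    return s->samples[s->depth - 1 - index];
}

/* Pop `count` buffers from the top of the stack. */
static void toy_pop(ToyStack *s, int count) {
    assert(count >= 0 && count <= s->depth);
    s->depth -= count;
}
```

Because every buffer comes from a preallocated pool and has the same frame count, none of these operations allocates memory, which is the property a render thread needs.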

| Function | Description |
|---|---|
| AEBufferStackPush() | Push one or more stereo buffers onto the stack. |
| AEBufferStackPop() | Remove one or more buffers from the top of the stack. |
| AEBufferStackMix() | Push a buffer that consists of the mixed audio from the top two or more buffers, and pop the original buffers. |
| AEBufferStackApplyFaders() | Apply volume and balance controls to the top buffer. |
| AEBufferStackSilence() | Fill the top buffer with silence (zero samples). |
| AEBufferStackSwap() | Swap the top two stack items. |
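The effect of mixing, swapping and silencing on the stack contents can be sketched with a toy model. The code below is an assumption-laden illustration, not the library's code: `MixStack`, `mix_top`, `swap_top` and `silence_top` are hypothetical names, buffers are mono, and mixing is modelled as a plain sample-by-sample sum.

```c
#include <assert.h>
#include <string.h>

#define MIX_MAX_BUFFERS 8   /* capacity of the toy pool (assumed value) */
#define MIX_FRAMES      4   /* frames per render cycle (assumed value) */

/* Hypothetical mono stack used only for this illustration. */
typedef struct {
    float buf[MIX_MAX_BUFFERS][MIX_FRAMES];
    int   depth;            /* buffers currently on the stack */
} MixStack;

/* Mix the top `count` buffers into one. The samples are summed into the
   lowest buffer of the group and the others are dropped, which has the same
   net effect as popping `count` buffers and pushing their mix. */
static void mix_top(MixStack *s, int count) {
    assert(count >= 2 && count <= s->depth);
    float *dest = s->buf[s->depth - count];
    for (int i = s->depth - count + 1; i < s->depth; i++) {
        for (int f = 0; f < MIX_FRAMES; f++) dest[f] += s->buf[i][f];
    }
    s->depth -= count - 1;
}

/* Swap the contents of the top two buffers. */
static void swap_top(MixStack *s) {
    assert(s->depth >= 2);
    float *a = s->buf[s->depth - 1];
    float *b = s->buf[s->depth - 2];
    for (int f = 0; f < MIX_FRAMES; f++) {
        float tmp = a[f];
        a[f] = b[f];
        b[f] = tmp;
    }
}

/* Fill the top buffer with zero samples. */
static void silence_top(MixStack *s) {
    assert(s->depth >= 1);
    memset(s->buf[s->depth - 1], 0, sizeof(float) * MIX_FRAMES);
}
```

Note that mixing reduces the stack depth by `count - 1`: three buffers with the top two mixed leaves two buffers.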

When you're ready to output a stack item, use AERenderContextOutput() to send the buffer to the output; it will be mixed with whatever's already on the output. Then optionally use AEBufferStackPop() to throw the buffer away.
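The phrase "mixed with whatever's already on the output" means the output accumulates rather than being overwritten. A minimal sketch of that behaviour, using a hypothetical helper `toy_output_add` (not a TAAE 2 function) and assuming mixing is a simple sum of samples:

```c
#include <assert.h>
#include <stddef.h>

/* Add `frames` samples from `buffer` into `output`. The existing contents of
   `output` are kept, so successive calls accumulate (mix) rather than replace. */
static void toy_output_add(float *output, const float *buffer, size_t frames) {
    assert(output != NULL && buffer != NULL);
    for (size_t i = 0; i < frames; i++) {
        output[i] += buffer[i];
    }
}
```

Under this model, outputting two buffers one after the other gives the same result as mixing them first and outputting the mix once.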

Most interaction with the stack is done through modules: individual units of processing that can generate audio (pushing new buffers onto the stack), add effects (getting stack items and modifying the audio within), analyse or record audio (getting stack items and doing something with the contents), or mix audio together (popping stack items off, and pushing new buffers). You create modules on the main thread when initialising your audio engine, or when changing state, and then process them from within your render loop using AEModuleProcess(). The modules, in turn, interact with the stack: pushing, getting and popping buffers.

An Example

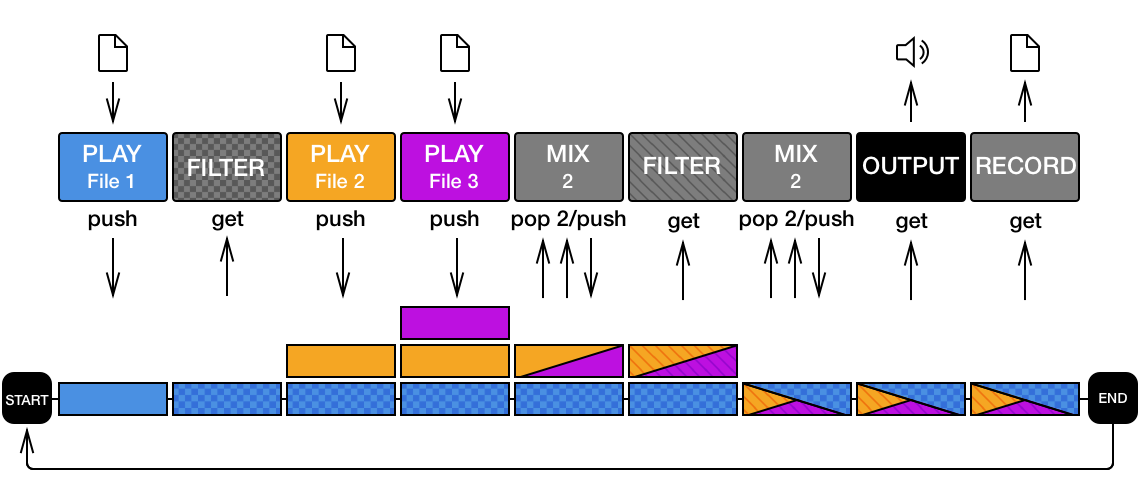

The following example takes three audio files, mixes and applies effects (we apply one effect to one player, and a second effect to the other two), then records and outputs the result. This perfoms the equivalent of the following graph:

First, some setup. We'll create an instance of AERenderer, which will drive our main render loop. Then we create an instance of AEAudioUnitOutput, which is our interface to the system audio output. Finally, we'll create a number of modules that we shall use. Note that each module maintains a reference to its controlling renderer, so it can track important changes such as sample rate.

Now, we can provide a render block, which contains the implementation for the audio pipeline. We run each module in turn, in the order that will provide the desired result.

Note that we interact with the rendering environment via the AERenderContext; this provides us with a variety of important state information for the current render, as well as access to the buffer stack.
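The order of operations in this example can be modelled without the library. The sketch below is a toy simulation in plain C, not TAAE 2 code: `ToyContext`, `ToyModule` and every function in it are hypothetical, a constant-level generator stands in for each file player, a simple gain stands in for the bandpass and delay effects, and buffers are mono. It only shows how the stack depth changes as each module runs.

```c
#include <assert.h>
#include <string.h>

#define FRAMES      4   /* frames per render cycle (assumed value) */
#define MAX_BUFFERS 8   /* capacity of the toy stack (assumed value) */

/* Hypothetical render context: a mono buffer stack plus an output buffer. */
typedef struct {
    float stack[MAX_BUFFERS][FRAMES];
    int   depth;
    float output[FRAMES];
} ToyContext;

/* Hypothetical module: a process function plus one parameter. */
typedef struct ToyModule ToyModule;
struct ToyModule {
    void (*process)(ToyModule *self, ToyContext *ctx);
    float value;             /* player: sample level; effect: gain */
    float captured[FRAMES];  /* recorder: copy of the last buffer seen */
};

static void toy_module_process(ToyModule *m, ToyContext *ctx) {
    m->process(m, ctx);
}

/* Generator: pushes one buffer (stands in for a file player). */
static void player_process(ToyModule *self, ToyContext *ctx) {
    assert(ctx->depth < MAX_BUFFERS);
    float *buf = ctx->stack[ctx->depth++];
    for (int f = 0; f < FRAMES; f++) buf[f] = self->value;
}

/* Effect: edits the top buffer in place (a gain stands in for a real filter). */
static void gain_process(ToyModule *self, ToyContext *ctx) {
    assert(ctx->depth >= 1);
    float *buf = ctx->stack[ctx->depth - 1];
    for (int f = 0; f < FRAMES; f++) buf[f] *= self->value;
}

/* Recorder: reads the top buffer without modifying the stack. */
static void recorder_process(ToyModule *self, ToyContext *ctx) {
    assert(ctx->depth >= 1);
    memcpy(self->captured, ctx->stack[ctx->depth - 1], sizeof self->captured);
}

/* Replace the top `count` buffers with their sum. */
static void toy_mix(ToyContext *ctx, int count) {
    assert(count >= 2 && count <= ctx->depth);
    float *dest = ctx->stack[ctx->depth - count];
    for (int i = ctx->depth - count + 1; i < ctx->depth; i++)
        for (int f = 0; f < FRAMES; f++) dest[f] += ctx->stack[i][f];
    ctx->depth -= count - 1;
}

/* One render cycle, in the order described in the text. */
static void toy_render(ToyContext *ctx, ToyModule *player1, ToyModule *effect1,
                       ToyModule *player2, ToyModule *player3,
                       ToyModule *effect2, ToyModule *recorder) {
    ctx->depth = 0;                              /* stack starts empty */
    memset(ctx->output, 0, sizeof ctx->output);
    toy_module_process(player1, ctx);            /* pushes 1, depth 1 */
    toy_module_process(effect1, ctx);            /* edits player 1's buffer */
    toy_module_process(player2, ctx);            /* pushes 1, depth 2 */
    toy_module_process(player3, ctx);            /* pushes 1, depth 3 */
    toy_mix(ctx, 2);                             /* players 2 + 3, depth 2 */
    toy_module_process(effect2, ctx);            /* edits that mix */
    toy_mix(ctx, 2);                             /* everything, depth 1 */
    toy_module_process(recorder, ctx);           /* captures the result */
    for (int f = 0; f < FRAMES; f++)             /* add top buffer to output */
        ctx->output[f] += ctx->stack[ctx->depth - 1][f];
    ctx->depth = 0;                              /* reset at end of cycle */
}
```

The ordering matters: the first effect runs while only the first player's buffer is on the stack, and the second effect runs after the other two players have been mixed, so each effect touches only the audio it is meant to.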

Finally, once everything is initialized, we start the output and the players.

We should hear all three audio file players, with a bandpass effect on the first, and a delay effect on the other two. We'll also get a recorded file which contains what we heard.

For a more sophisticated example, take a look at the sample app that comes with TAAE 2.

More documentation coming soon.